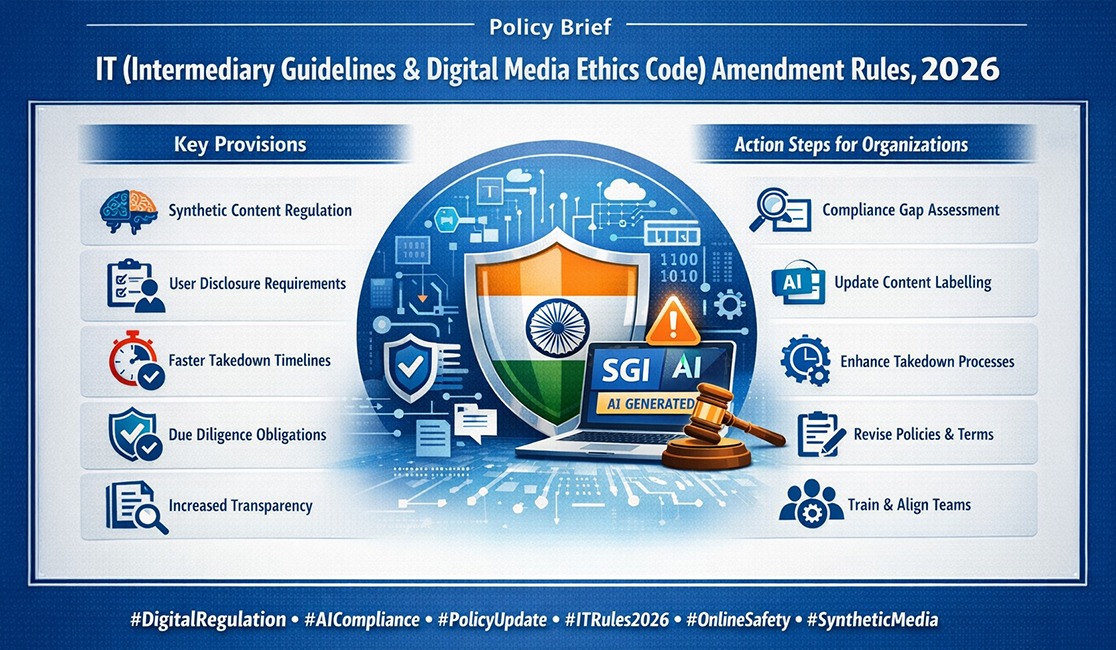

Intermediary Guidelines & Digital Media Ethics Code

The IT (Intermediary Guidelines & Digital Media Ethics Code) Amendment Rules, 2026 introduce important compliance changes for digital platforms operating in India. The amendments focus primarily on synthetic or AI-generated content, faster takedown timelines, and stronger accountability mechanisms for intermediaries.

The summary below covers the key provisions and the steps organizations should take to prepare.

Key Provisions

- Regulation of Synthetic (AI-Generated) Content: The Rules formally recognize artificially generated or altered audio, video, and images that appear authentic. Platforms that allow such content must ensure it is clearly identified as synthetic, using both visible labels and embedded identifiers that cannot be removed.

- User Disclosure Requirements: Platforms may be required to obtain declarations from users on whether content has been artificially generated or modified. Reasonable systems must be put in place to verify and enforce these disclosures.

- Shorter Takedown Timelines: The amendments significantly reduce response times. Certain government-directed removals must be acted upon within hours, non-consensual intimate imagery must be removed on an urgent basis, and user grievances must be addressed within shorter, defined timeframes. These compressed deadlines place operational pressure on moderation and legal teams.

- Strengthened Due Diligence Obligations: Intermediaries must demonstrate active compliance. Failure to meet due diligence requirements could affect eligibility for safe harbour protections under the IT Act.

- Increased Transparency: Platforms must periodically inform users about prohibited content categories and the consequences of violations.
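
The labelling and disclosure provisions above can be illustrated with a minimal sketch, assuming a platform records a user declaration at upload time and attaches both a visible label and a machine-readable identifier. The names `LabelledUpload`, `label_upload`, and `SYNTHETIC_LABEL` are hypothetical and do not come from the Rules. The sketch also does not make the identifier tamper-proof; that would need a separate mechanism such as watermarking or signed provenance metadata.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical label text; the wording actually required is set by the Rules.
SYNTHETIC_LABEL = "AI-generated content"


@dataclass(frozen=True)
class LabelledUpload:
    """Record kept alongside an uploaded file (illustrative structure only)."""
    content_sha256: str                 # ties the record to the exact bytes uploaded
    declared_synthetic: bool            # the user's declaration at upload time
    visible_label: Optional[str]        # text shown to viewers, if any
    embedded_metadata: Dict[str, str] = field(default_factory=dict)


def label_upload(content: bytes, declared_synthetic: bool) -> LabelledUpload:
    """Build the label record for an upload based on the user's declaration."""
    digest = hashlib.sha256(content).hexdigest()
    if not declared_synthetic:
        # No label is attached; verification of the declaration happens elsewhere.
        return LabelledUpload(digest, False, None)
    metadata = {"synthetic": "true", "content_sha256": digest}
    return LabelledUpload(digest, True, SYNTHETIC_LABEL, metadata)
```

Storing the content hash with the declaration gives a simple audit trail: a later review can confirm which bytes the declaration and label referred to.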

What Organizations Should Do Now

- Conduct a Compliance Gap Assessment: Review current policies, content moderation workflows, AI deployment practices, and response timelines against the amended Rules.

- Update Content Labelling Systems: Ensure mechanisms exist to clearly label AI-generated or synthetic content. Review metadata practices to ensure traceability where required.

- Strengthen Takedown Processes: Reassess internal escalation frameworks to meet compressed response deadlines. This may require 24/7 response capabilities.

- Revise User Terms and Policies: Update platform policies to reflect new disclosure requirements and enforcement consequences.

- Train Teams and Align Governance: Legal, product, trust & safety, and engineering teams should be aligned on new compliance responsibilities.
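
As one way to support the takedown step above, an escalation framework could track each request against its response deadline and surface the most urgent items first. The following is a minimal sketch under stated assumptions: `TakedownRequest` and `escalation_queue` are hypothetical names, and the response window is passed in as a parameter because the actual statutory timeframes must be taken from the Rules themselves.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class TakedownRequest:
    """A removal request tracked against its response deadline (illustrative)."""
    request_id: str
    category: str          # e.g. "government_order", "user_grievance" (hypothetical values)
    received_at: datetime  # timezone-aware receipt time
    window: timedelta      # response window; take the real figure from the Rules

    @property
    def due_at(self) -> datetime:
        return self.received_at + self.window

    def time_remaining(self, now: datetime) -> timedelta:
        """Time left before the deadline; negative once the deadline has passed."""
        return self.due_at - now

    def is_overdue(self, now: datetime) -> bool:
        return now > self.due_at


def escalation_queue(requests: List[TakedownRequest], now: datetime) -> List[TakedownRequest]:
    """Order open requests so that the earliest deadline is handled first."""
    return sorted(requests, key=lambda r: r.time_remaining(now))
```

Sorting by time remaining means an overdue request always appears ahead of one that still has slack, which is the behaviour a 24/7 response rota would typically need.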

Strategic Implication

The 2026 amendments signal a move toward tighter oversight of digital content ecosystems, particularly AI-enabled content. Compliance is no longer reactive; it requires structured systems, documentation, and demonstrable accountability.

Organizations that proactively integrate these requirements into governance, product design, and risk management processes will be better positioned to maintain regulatory protection and user trust.
